The vast majority of modern security companies use machine learning (ML) models to accelerate processes, automate defenses, and more. These machine-powered classification systems are an essential component in the battle against fast-evolving malware threats. Given their widespread use in the technology industry, an obvious question arises: How does a company demonstrate true innovation in this field?

In the past, this question has been answered in one of two ways. Some companies have tried to innovate by applying new and exciting machine learning algorithms; in this vein, we have seen complex neural networks and deep learning models reach the market. Others have focused on features, datasets, and labels, solving the classification problem by collecting huge amounts of data about malware and letting the algorithms do their magic. Very few companies have combined both approaches.

Observing the direction other security companies have taken, we at ReversingLabs felt that something big was missing: No one was focusing on the human element. We found that there wasn't a solution in the market that tried to bridge the gap between detecting malware and helping analysts understand why such detections happened in the first place. Until today, that is.

The team at ReversingLabs is proud to unveil its novel approach to machine learning malware detection: a system we call Explainable Machine Learning. It's the first machine learning-powered classification system designed primarily for humans.

Machine learning is a technology that, essentially, converts information and object relationships into numbers that quantify these properties. The very first step in implementing any such system is converting human experience into a sequence of numbers that the machine understands and can learn from. Machines are far better at dealing with numbers than the people who are meant to use them. That's why most ML/AI (artificial intelligence) systems feel limiting to humans, and at times very challenging to understand. It is also why the very first question an analyst asks a machine learning expert is “why?” Why did the machine present such a result? Why was this object detected as malicious?
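To make that first step concrete, here is a minimal sketch in Python. It assumes each object has already been reduced to a set of named indicators (the names are hypothetical, not ReversingLabs' actual rule names) and uses a simple multi-hot encoding, one common choice but not necessarily the one any production system uses: every known indicator gets a fixed column, set to 1 when present and 0 otherwise.

```python
from typing import Dict, List, Set


def build_vocabulary(samples: List[Set[str]]) -> Dict[str, int]:
    """Assign every indicator seen in the training samples a stable column index."""
    names = sorted(set().union(*samples))
    return {name: index for index, name in enumerate(names)}


def encode(indicators: Set[str], vocabulary: Dict[str, int]) -> List[int]:
    """Turn a set of indicators into a binary (multi-hot) feature vector.

    Indicators missing from the vocabulary are ignored, because the model
    has no column (and therefore no learned weight) for them.
    """
    vector = [0] * len(vocabulary)
    for name in indicators:
        index = vocabulary.get(name)
        if index is not None:
            vector[index] = 1
    return vector


# Hypothetical indicator sets for two analyzed objects.
samples = [
    {"encrypts files", "deletes shadow copies"},
    {"logs keystrokes", "encrypts files"},
]
vocabulary = build_vocabulary(samples)
print(vocabulary)
print(encode({"deletes shadow copies", "unknown behavior"}, vocabulary))
```

Because every column maps back to a named indicator, any weight a model later learns for that column can be read as a statement about a human-readable behavior.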

Explainable machine learning is built on ReversingLabs' advanced binary analysis system, which converts objects into human-readable indicators describing the intent of the code found within them. Regardless of what the analyzed object is, our static analysis system can go through all of its components and describe them in an easy-to-understand way, all in just a few milliseconds.

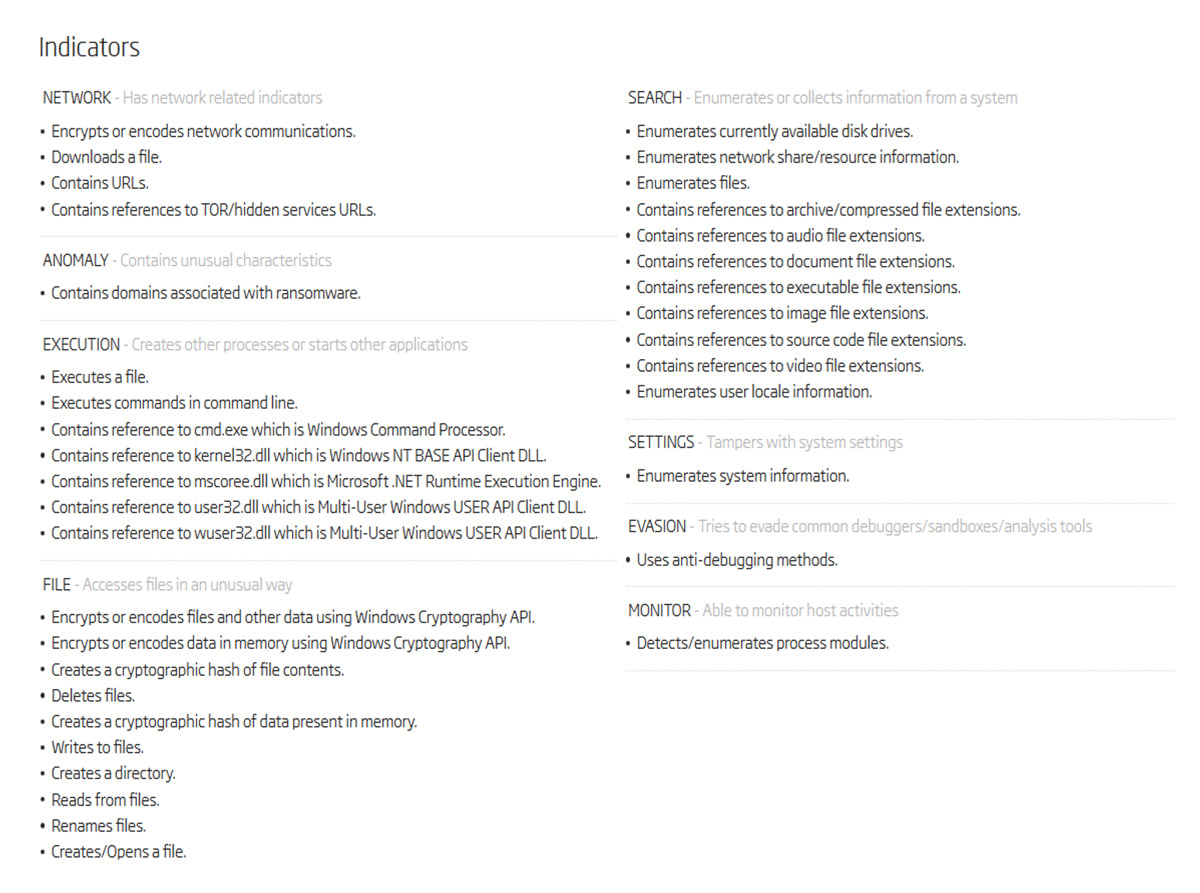

The following picture depicts how our static analysis system describes one ransomware malware family.

Ransomware example - Win32.Ransomware.Jaff

Indicators listed in the image above are the result of hard work by our reverse engineers and threat analysts who create rules that convert code and metadata properties into human-readable descriptions. Those rules are generalized and can describe any object type, whether it's a malware threat or not. So even if we can’t classify an object, these indicators can still accurately describe its behavior.
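The rules themselves are not public, so the following is only an illustrative sketch of the idea: each hypothetical rule pairs a readable description with a predicate over properties extracted by static analysis. The property keys (`imports`, `strings`), the helper names, and the rules are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Set

# Hypothetical static-analysis output: named properties extracted from one object.
Properties = Dict[str, Set[str]]


@dataclass(frozen=True)
class Rule:
    """Maps a low-level code or metadata pattern to a readable description."""
    description: str
    matches: Callable[[Properties], bool]


def has_import(name: str) -> Callable[[Properties], bool]:
    """Predicate: the object imports the given API function."""
    return lambda props: name in props.get("imports", set())


def has_string(fragment: str) -> Callable[[Properties], bool]:
    """Predicate: some embedded string contains the given fragment."""
    return lambda props: any(fragment in s for s in props.get("strings", set()))


# Illustrative rules only; real rule sets are far larger and more precise.
RULES: List[Rule] = [
    Rule("Encrypts files using a cryptographic API", has_import("CryptEncrypt")),
    Rule("Deletes volume shadow copies", has_string("vssadmin delete shadows")),
    Rule("Enumerates files on disk", has_import("FindFirstFileW")),
]


def describe(props: Properties) -> List[str]:
    """Return every matching indicator, whether or not the object is malicious."""
    return [rule.description for rule in RULES if rule.matches(props)]


sample = {
    "imports": {"CryptEncrypt", "FindFirstFileW"},
    "strings": {"cmd /c vssadmin delete shadows /all /quiet"},
}
print(describe(sample))
```

Note that `describe` never decides whether an object is malware; it only describes behavior, which is what lets the indicators stay useful even for objects that cannot be classified.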

However, being able to describe something as ransomware and being able to detect it are two completely different things. This is where explainable machine learning comes in. Built solely on these human-readable indicators, it is a novel malware detection system whose results are always interpretable by a human analyst. The underlying idea is simple: if our system makes a classification decision, it must be able to defend that decision with the description it provides for the malware it detects. By turning this idea into reality, we've put the human first and made the machine its ultimate companion.
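The model internals are not described here, so as an assumption, this sketch uses a simple linear model over binary indicators with made-up weights. It shows one way to enforce the "defend the decision" requirement: the verdict is returned together with the indicators that pushed the score toward ransomware, and an object is never flagged without at least one such indicator.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical learned weights for a ransomware classifier. A positive weight
# pushes the decision toward "ransomware"; a negative one pushes away from it.
WEIGHTS: Dict[str, float] = {
    "Encrypts files using a cryptographic API": 2.0,
    "Deletes volume shadow copies": 2.5,
    "Enumerates files on disk": 0.5,
    "Has a valid digital signature": -1.5,
}
BIAS = -3.0


def classify(indicators: Set[str]) -> Tuple[bool, List[str]]:
    """Score an object and return the indicators that justify a positive verdict."""
    score = BIAS + sum(WEIGHTS.get(name, 0.0) for name in indicators)
    # Only indicators that pushed the score toward "ransomware" can defend the
    # verdict; list the strongest first.
    evidence = sorted(
        (name for name in indicators if WEIGHTS.get(name, 0.0) > 0),
        key=lambda name: -WEIGHTS[name],
    )
    is_ransomware = score > 0 and bool(evidence)
    return is_ransomware, (evidence if is_ransomware else [])


print(classify({"Encrypts files using a cryptographic API", "Deletes volume shadow copies"}))
```

A linear model is used here because each indicator's contribution to the score is directly readable; more complex models need extra attribution machinery to produce the same kind of explanation.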

Most machine learning classifiers are built from the top down. Companies that implement them usually start with simple classifiers that discern good from bad. Data scientists extract millions of features from millions of objects, converting raw data into floating-point numbers. Given enough compute power, machine learning models are then trained on these numbers to find the optimal curves that split the dataset according to the selected labels.
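For readers unfamiliar with the mechanics, the simplest version of "finding a curve that splits the dataset" is logistic regression, where the curve is a straight line (a hyperplane in higher dimensions). The sketch below trains one with batch gradient descent on a tiny, made-up two-feature dataset; it is a generic illustration, not a description of any particular vendor's model.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def linear_score(w: Sequence[float], x: Sequence[float]) -> float:
    """w[0] is the bias; the remaining weights pair up with the features in x."""
    return w[0] + sum(wj * xj for wj, xj in zip(w[1:], x))


def train_logistic_regression(
    X: Sequence[Sequence[float]],
    y: Sequence[int],
    learning_rate: float = 0.5,
    epochs: int = 2000,
) -> List[float]:
    """Fit bias and weights by batch gradient descent on the average log loss."""
    w = [0.0] * (len(X[0]) + 1)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for xi, yi in zip(X, y):
            error = sigmoid(linear_score(w, xi)) - yi  # d(log loss)/d(score)
            grad[0] += error
            for j, xj in enumerate(xi, start=1):
                grad[j] += error * xj
        w = [wj - learning_rate * gj / len(X) for wj, gj in zip(w, grad)]
    return w


def predict(w: Sequence[float], x: Sequence[float]) -> int:
    """1 if x lies on the positive side of the learned line w0 + w1*x1 + w2*x2 = 0."""
    return int(linear_score(w, x) > 0)


# Toy, linearly separable data: label 1 when both features are large.
X = [[0.1, 0.2], [0.3, 0.1], [0.2, 0.4], [0.9, 0.8], [0.8, 0.9], [0.7, 0.95]]
y = [0, 0, 0, 1, 1, 1]
w = train_logistic_regression(X, y)
print([predict(w, x) for x in X])
```

With millions of opaque numeric features the learned weights say little to an analyst, which is the gap the bottom-up, indicator-based approach described next is meant to close.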

Knowing good from bad is certainly the crux of malware detection, but it is not the most important answer a detection system must provide. The second question an analyst will pose is “what?” What did the system detect? An analyst's response to any malware threat depends heavily on the answer to this question.

That is why we’ve built our explainable machine learning system from the bottom up, with the idea that declaring which malware type it has detected is its most important feature. Combined with the human-readable indicators, machine learning explainability means that the results must be logical: human analysts must be able to read the list of provided indicators and agree that they correctly describe the functionality of the detected malware type.
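A minimal sketch of type-first classification, assuming one hypothetical linear model per malware type (the types come from this post; the indicators, weights, and biases are invented): the highest-scoring type is reported together with the indicators that support it, so an analyst can check whether that list really describes, say, a keylogger.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical per-type linear models: {malware type: (bias, {indicator: weight})}.
MODELS: Dict[str, Tuple[float, Dict[str, float]]] = {
    "Ransomware": (-3.0, {"Encrypts files": 2.0, "Deletes shadow copies": 2.0,
                          "Displays ransom note": 1.5}),
    "Keylogger": (-2.0, {"Installs keyboard hook": 2.5, "Writes keystrokes to file": 1.0}),
    "Backdoor": (-2.0, {"Opens listening socket": 1.5, "Executes remote commands": 1.5}),
}


def detect_type(indicators: Set[str]) -> Tuple[str, List[str]]:
    """Return the best-scoring malware type and the indicators that support it.

    Returns ("Unclassified", []) when no type model scores above zero.
    """
    best_type, best_score = "Unclassified", 0.0
    for malware_type, (bias, weights) in MODELS.items():
        score = bias + sum(weights.get(name, 0.0) for name in indicators)
        if score > best_score:
            best_type, best_score = malware_type, score
    if best_type == "Unclassified":
        return best_type, []
    weights = MODELS[best_type][1]
    # The supporting list is what the analyst reads to confirm the verdict.
    support = sorted(name for name in indicators if weights.get(name, 0.0) > 0)
    return best_type, support


print(detect_type({"Installs keyboard hook", "Writes keystrokes to file", "Encrypts files"}))
```

In this example the stray "Encrypts files" indicator is present but does not appear in the keylogger's supporting list, because it carries no weight in that type's model.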

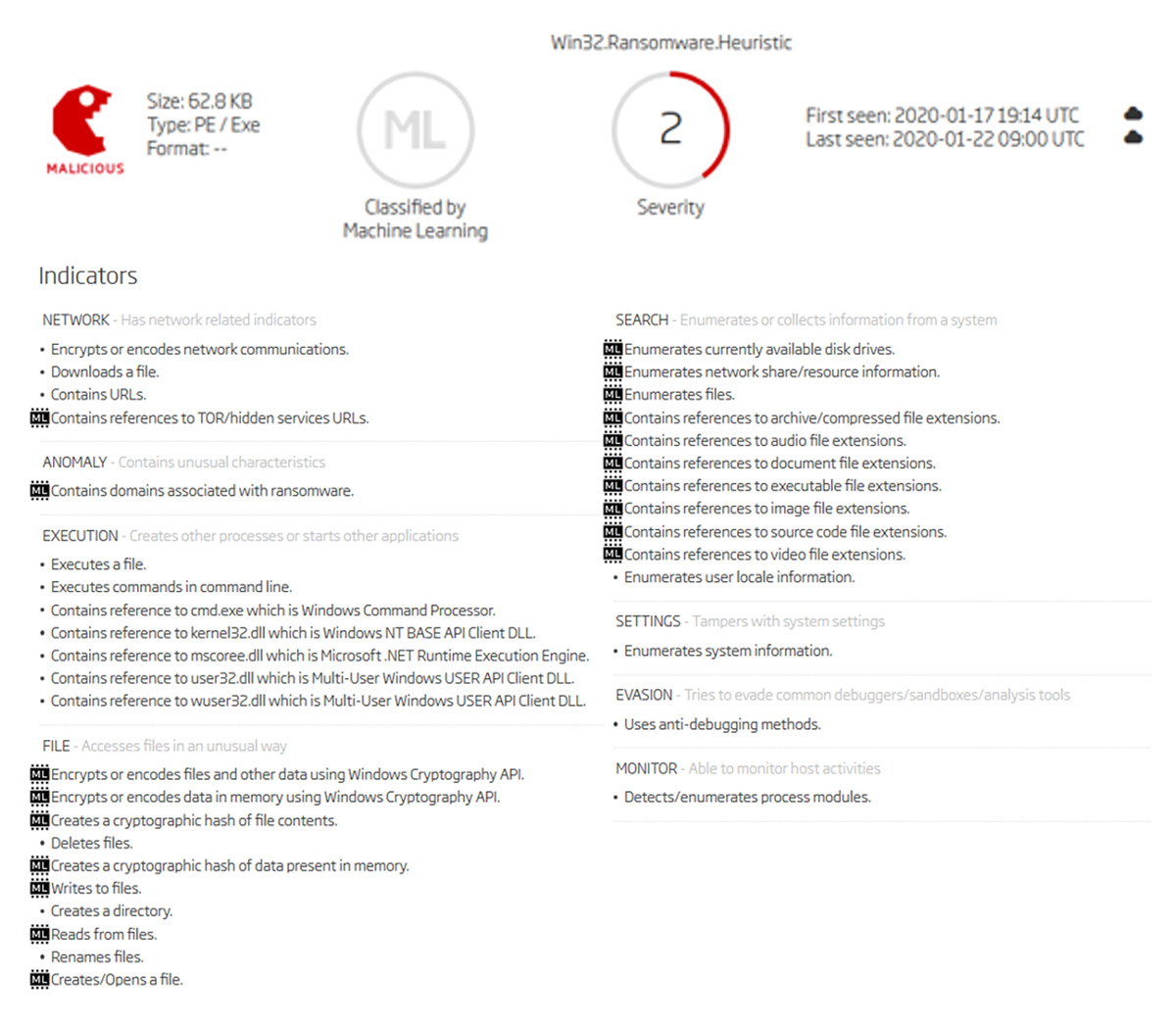

Certainly, not all indicators are created equal. Some contribute to malware detection. Knowing which ones is what makes our explainable machine learning model transparent in its decision-making. This final piece of the puzzle builds trust in the accuracy of our classification system which is exactly why we have exposed human analysts to our model's reasoning.

Ransomware example - Win32.Ransomware.Jaff
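One simple way to decide which indicators count as significant, shown here as an assumption rather than the model's actual criterion, is to rank the positive contributions to a verdict and keep the fewest top contributors that together explain a chosen share (80% below) of the total. With binary indicators and a linear model, an indicator's contribution is just its weight. The indicator names and weights are hypothetical.

```python
from typing import Dict, List, Set


def significant_indicators(
    indicators: Set[str], weights: Dict[str, float], coverage: float = 0.8
) -> List[str]:
    """Return the fewest top contributors that together account for `coverage`
    of the total positive contribution to the verdict."""
    # (contribution, name) pairs for present indicators that support the verdict,
    # largest contribution first.
    contributions = sorted(
        ((weights[name], name) for name in indicators if weights.get(name, 0.0) > 0),
        reverse=True,
    )
    total = sum(value for value, _ in contributions)
    selected: List[str] = []
    running = 0.0
    for value, name in contributions:
        if running >= coverage * total:
            break
        selected.append(name)
        running += value
    return selected


weights = {
    "Deletes volume shadow copies": 5.0,
    "Encrypts files using a cryptographic API": 3.0,
    "Enumerates files on disk": 1.0,
    "Reads system locale": 1.0,
    "Has a valid digital signature": -2.0,
}
print(significant_indicators(set(weights), weights))
```

The coverage threshold is a tunable design choice: raising it surfaces more of the weaker indicators, while lowering it keeps the explanation short.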

It's great when explainable machine learning makes sense for a single object. However, only in the context of a large dataset can it truly be shown to provide meaningful insights. This is especially difficult for most machine learning systems, because they tend to be a by-product of the dataset they were trained on. If those datasets contain biases, it is very easy to create a model that is overfitted in a few narrow directions. Such design mistakes create models that put too much weight on a single feature, which makes them easy to bypass.
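The bypass risk is easy to demonstrate with a toy linear model. In the hypothetical, deliberately overfitted weights below, one indicator (a packer, which benign software can also use) carries almost all of the weight, so removing that single indicator flips the verdict; a model with balanced weights survives the same test. All names, weights, and biases are invented for illustration.

```python
from typing import Dict, List, Set


def score(indicators: Set[str], weights: Dict[str, float], bias: float) -> float:
    """Linear score over binary indicators; unknown indicators contribute nothing."""
    return bias + sum(weights.get(name, 0.0) for name in indicators)


def single_point_bypasses(
    indicators: Set[str], weights: Dict[str, float], bias: float
) -> List[str]:
    """List indicators whose removal alone turns a positive verdict negative."""
    if score(indicators, weights, bias) <= 0:
        return []
    return sorted(
        name for name in indicators
        if score(indicators - {name}, weights, bias) <= 0
    )


sample = {"Packed with UPX", "Encrypts files", "Deletes shadow copies"}

# Overfitted: the packer indicator dominates the decision.
overfitted = {"Packed with UPX": 6.0, "Encrypts files": 0.5, "Deletes shadow copies": 0.5}
# Balanced: the behavioral indicators carry the decision together.
balanced = {"Packed with UPX": 1.0, "Encrypts files": 2.5, "Deletes shadow copies": 2.5}

print(single_point_bypasses(sample, overfitted, bias=-5.0))
print(single_point_bypasses(sample, balanced, bias=-3.0))
```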

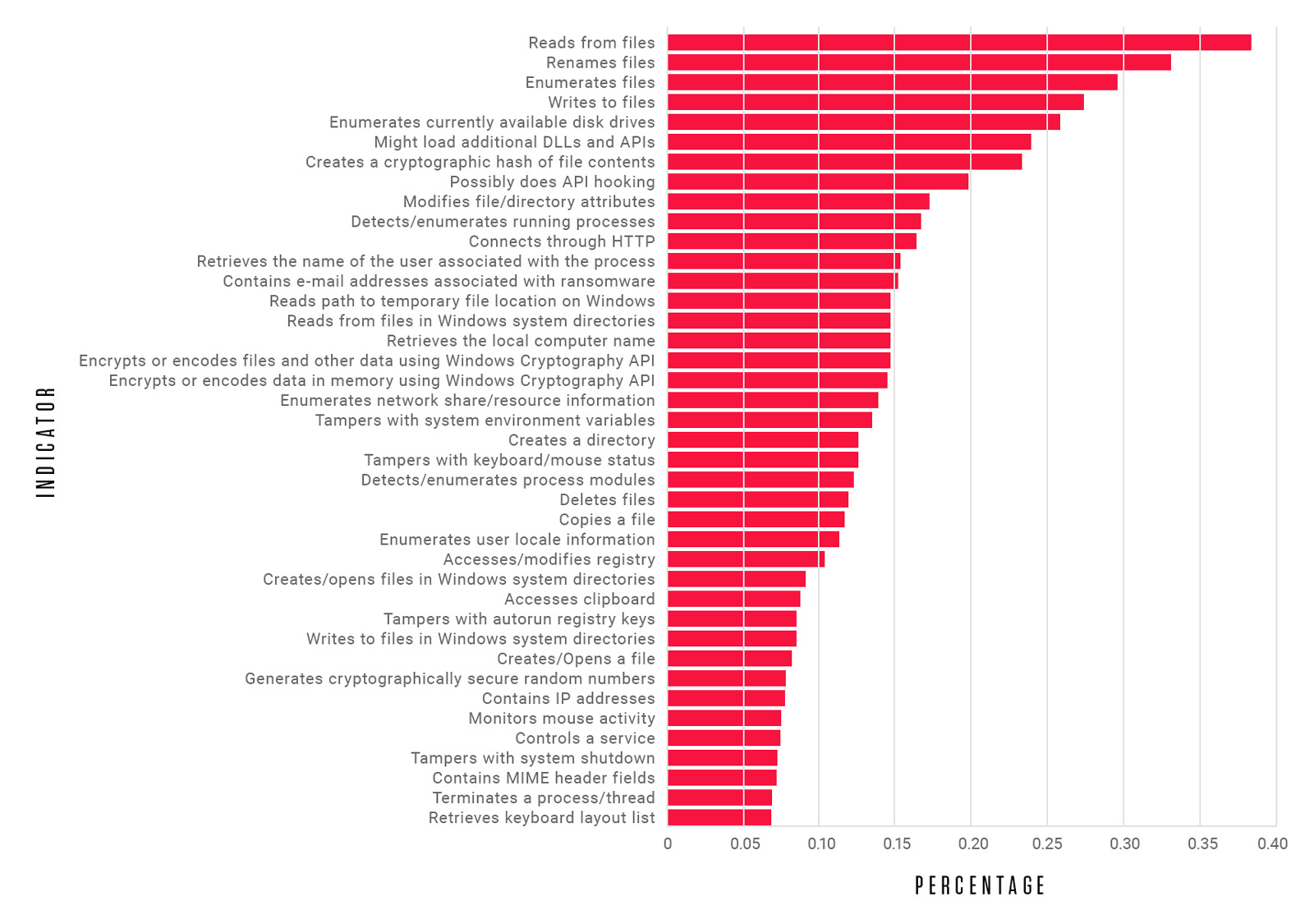

Monitoring the indicators ReversingLabs' model considers, the most significant contributors to malware type detection is the key metric that tracks the model's explainability. Breaking down the statistics of what our ransomware detection model sees as significant, across our entire ransomware dataset, produces the following chart.

Ransomware detection model statistics

The indicators above are the human-readable features that we’ve trained our machine learning model on. The horizontal axis shows the percentage of ransomware families in our dataset for which each indicator has been seen as a significant classification contributor. The chart therefore shows that all ransomware families share common traits. This common denominator is exactly the set of features an analyst would think of when trying to describe what ransomware does. On the other hand, uncommon features might not be statistically significant across the entire dataset, but they are equally important when deciding whether an object is ransomware. It is these less frequently seen indicators that end up describing individual families, or even smaller clusters within our large ransomware dataset.
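The statistic behind such a chart can be sketched as follows, under the assumption (not stated above) that an indicator counts as significant for a family if it was significant for at least one sample of that family. The input maps hypothetical family names to the significant-indicator sets of their samples; the output gives, per indicator, the percentage of families in which it was significant, sorted from most to least common.

```python
from collections import defaultdict
from typing import Dict, List, Set


def indicator_prevalence(significant_by_family: Dict[str, List[Set[str]]]) -> Dict[str, float]:
    """Percentage of families in which each indicator was significant for at least one sample."""
    families_per_indicator: Dict[str, Set[str]] = defaultdict(set)
    for family, samples in significant_by_family.items():
        for significant in samples:
            for name in significant:
                families_per_indicator[name].add(family)
    n_families = len(significant_by_family)
    # Most common indicators first; ties broken alphabetically for a stable order.
    ordered = sorted(families_per_indicator.items(), key=lambda item: (-len(item[1]), item[0]))
    return {name: 100.0 * len(families) / n_families for name, families in ordered}


# Hypothetical per-sample significant indicators for four ransomware families.
data = {
    "family_a": [{"Encrypts files", "Deletes shadow copies"}, {"Encrypts files"}],
    "family_b": [{"Encrypts files", "Displays ransom note"}],
    "family_c": [{"Encrypts files", "Deletes shadow copies"}],
    "family_d": [{"Encrypts files", "Spreads over SMB"}],
}
print(indicator_prevalence(data))
```

Entries near 100% form the common denominator described above, while the long tail of low percentages holds the indicators that characterize individual families.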

With explainable machine learning, the way analysts interact with indicators changes drastically. Transparency in the decision-making process highlights the most important properties of a malware family. That information is key for assessing the organizational impact of a malware infection, and it is the starting point from which a response is planned.

Machine learning models are a great choice for the first line of defense. These signatureless heuristic systems do a great job of identifying whether something is malware, and even what type of malware it is. Their detection outcomes are predictive rather than reactive, which makes detecting new malware variants possible. Even brand-new malware families can be detected without the models being explicitly trained to do so. In terms of reliability, they also require fewer updates than conventional signatures, and their effective detection rates decay more slowly.

Over the last two years, our team has been building explainable machine learning classifiers for the following malware threats: ransomware, keyloggers, backdoors, and worms. We have taken the biggest malware problems enterprises face today and created a detection system to protect enterprises against them. Needless to say, this is just the beginning, as our aggressive malware detection roadmap speeds up and we vastly expand our classification capabilities.

Our team is excited to finally be at the stage where we can present our machine learning efforts. We hope to hear your impressions, fellow security practitioners and defenders, as you start using this intelligence in your daily battles. Please contact us for ways to give this exciting new technology a try.
